Introducing a new database category - the predictive database

Antti Rauhala

Co-founder

August 27, 2019 • 4 min read

(Updated September 9th, 2020)

Could machine learning be made radically more accessible and faster to use? What if you could get predictions, recommendations, and other AI/ML functionality with queries like this:

    {
      "from": "engagements",
      "where": {
        "customer": "john.smith@gmail.com"
      },
      "recommend": "product",
      "goal": "purchase"
    }

In a typical tech environment, it is extremely easy to find applications for AI/ML. End users have gotten used to machine learning-driven features like recommendations, and such features can deliver huge business benefits. ML-related features are a common discussion topic in both product teams and management. There is simply an abundance of ideas and a desire to bring ML to software.

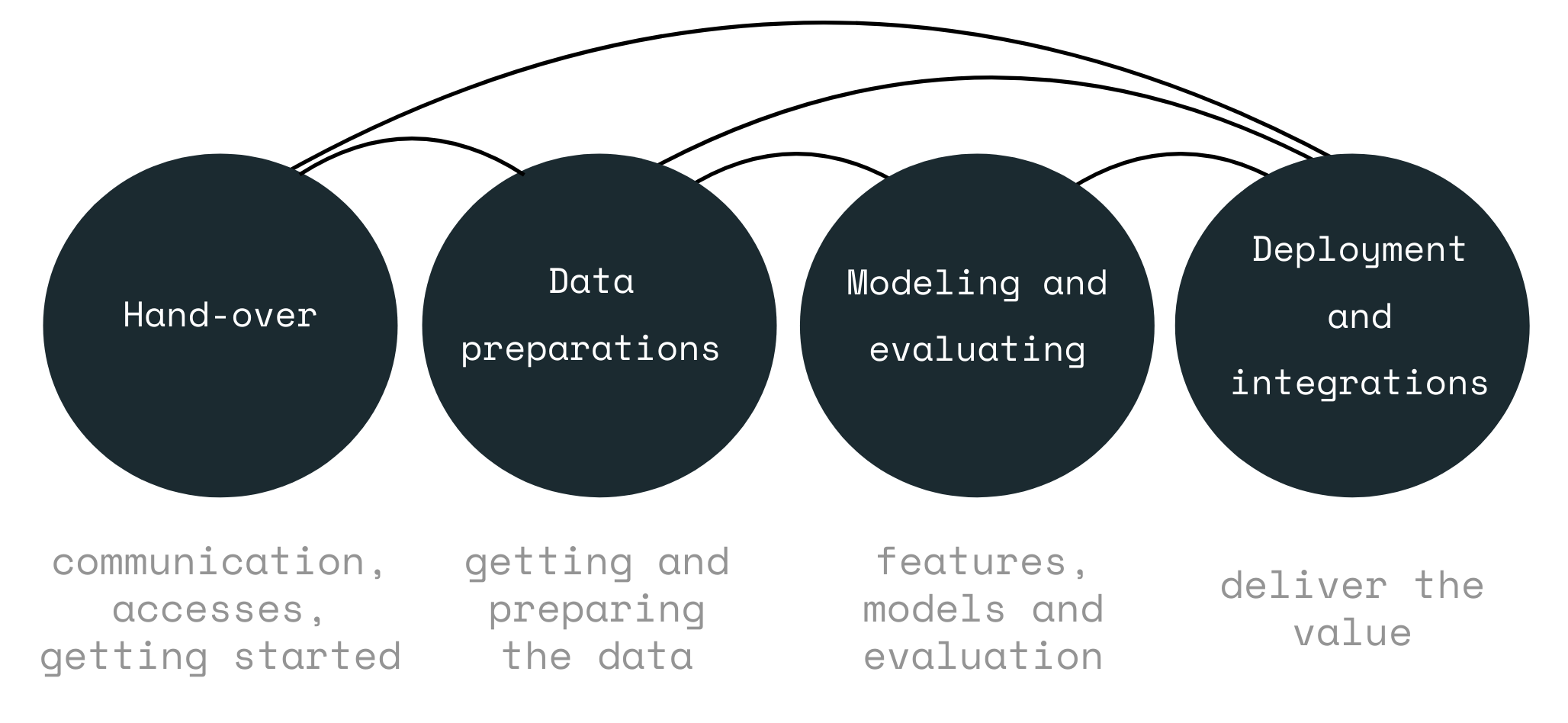

Yet, while machine learning is huge, it can be inaccessible for the average development team. Not every development team has a data scientist. Moreover, machine learning is often prohibitively expensive. The accompanying image depicts one way to frame a typical machine learning project. What the picture omits is that the process can take weeks or months, it can cost tens or hundreds of thousands of euros, and the results are not always what was expected.

Few product teams can spare an extra 50k€ to try a smart functionality that might improve the product. As a consequence, most of the value-adding smart functionality, maybe over 90% of it, is not financially feasible.

Given all this, the real question is: could machine learning be made radically more accessible and faster to use?

The Predictive Database

To truly understand the significance of the predictive database, let’s consider the following scenario.

You have a database that provides the normal database operations for your grocery store. You can use the database to list a customer's historical purchases like this:

    {
      "from": "purchases",
      "where": {
        "customer": "john.smith@gmail.com"
      }
    }

Now, your product owner (PO) has done some interviews and found that customers complain that filling in the weekly shopping list is a huge hassle. What would you do? Perhaps you could use the following query to predict the customer’s next purchases:

    {
      "from": "purchases",
      "where": {
        "customer": "john.smith@gmail.com"
      },
      "predict": "productIds",
      "exclusiveness": false
    }

The query results list the customer’s likely next purchases, ordered by purchase probability. You can use them to prefill the shopping basket with the weekly butters and milks. They can also be used to provide recommendations.
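To make the idea concrete, here is a minimal Python sketch of what answering such a query could look like against an in-memory table. It is not Aito's implementation or API: the `predict` function, the `db` dictionary, and the sample rows are all hypothetical, the probability is a plain per-basket frequency estimate, and the sketch ignores the `exclusiveness` key.

```python
from collections import Counter

# Hypothetical in-memory "database": table name -> list of rows.
db = {
    "purchases": [
        {"customer": "john.smith@gmail.com", "productIds": ["milk", "butter"]},
        {"customer": "john.smith@gmail.com", "productIds": ["milk", "bread"]},
        {"customer": "john.smith@gmail.com", "productIds": ["milk", "butter", "eggs"]},
        {"customer": "jane.doe@example.com", "productIds": ["coffee"]},
    ]
}

def predict(db, query):
    """Rank values of the predicted field by how often they occur in the
    rows matching the "where" clause (a toy frequency estimate)."""
    rows = [
        row for row in db[query["from"]]
        if all(row.get(k) == v for k, v in query["where"].items())
    ]
    if not rows:
        return []
    counts = Counter()
    for row in rows:
        # Count each product once per basket; the resulting probabilities
        # are per product and need not sum to 1.
        counts.update(set(row[query["predict"]]))
    ranked = [(value, n / len(rows)) for value, n in counts.items()]
    # Highest probability first; ties are broken alphabetically.
    return sorted(ranked, key=lambda pair: (-pair[1], pair[0]))

query = {
    "from": "purchases",
    "where": {"customer": "john.smith@gmail.com"},
    "predict": "productIds",
    "exclusiveness": False,  # not interpreted by this sketch
}
result = predict(db, query)
for product, probability in result:
    print(f"{product}: {probability:.2f}")
```

With this sample data, milk appears in every one of the customer's baskets and is ranked first, which is the kind of ordering you would use to prefill a shopping list.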

But there is more. What if you have some impression and click data, and your PO, your customers, and the team itself want personalized search? Let’s try the following query to recommend ‘milk’-related products:

    {
      "from": "impressions",
      "where": {
        "customer": "john.smith@gmail.com",
        "product.text": {
          "$match": "milk"
        }
      },
      "recommend": "product",
      "goal": { "click": true }
    }

The query returns the products containing the word ‘milk’, ordered by the probability that the customer will click them. If the customer is lactose-intolerant, the lactose-free products will be listed first. As such, the query seamlessly combines soft statistical reasoning with a hard text search operation to get the sought results.
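The combination of a hard filter and a soft score can be illustrated with a small Python sketch. Everything here is an assumption made for illustration: the `recommend` function, the impression rows, and the add-one smoothed click-rate estimate are hypothetical and are not how Aito computes its results.

```python
# Hypothetical impression log: one row per product shown to a customer.
impressions = [
    {"customer": "john.smith@gmail.com", "product": "lactose-free milk", "click": True},
    {"customer": "john.smith@gmail.com", "product": "lactose-free milk", "click": True},
    {"customer": "john.smith@gmail.com", "product": "whole milk", "click": False},
    {"customer": "john.smith@gmail.com", "product": "oat milk", "click": True},
    {"customer": "john.smith@gmail.com", "product": "oat milk", "click": False},
    {"customer": "john.smith@gmail.com", "product": "rye bread", "click": True},
]

def recommend(rows, customer, text, goal_field="click"):
    # Hard step: keep only this customer's rows whose product text
    # contains the search word.
    matching = [
        r for r in rows
        if r["customer"] == customer and text in r["product"].split()
    ]
    # Soft step: estimate P(click | product) with add-one (Laplace) smoothing.
    stats = {}
    for r in matching:
        shown, clicked = stats.get(r["product"], (0, 0))
        stats[r["product"]] = (shown + 1, clicked + int(r[goal_field]))
    scored = [(p, (c + 1) / (s + 2)) for p, (s, c) in stats.items()]
    # Highest estimated click probability first; ties broken alphabetically.
    return sorted(scored, key=lambda pair: (-pair[1], pair[0]))

ranking = recommend(impressions, "john.smith@gmail.com", "milk")
for product, score in ranking:
    print(f"{product}: {score:.2f}")
```

In this toy data the customer has clicked the lactose-free milk every time it was shown, so it ranks first, while the rye bread never enters the ranking because the text filter excludes it before any scoring happens.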

There is a wide array of other ML-related problems that can be quickly solved with queries. For example, you can form simple queries to propose tags for products, propose personalized query words, match email lines with the products in the database, or explain customer purchases and behavior. As such, these simple queries can provide an intelligent user experience, process automation, and analytics.

The Technology

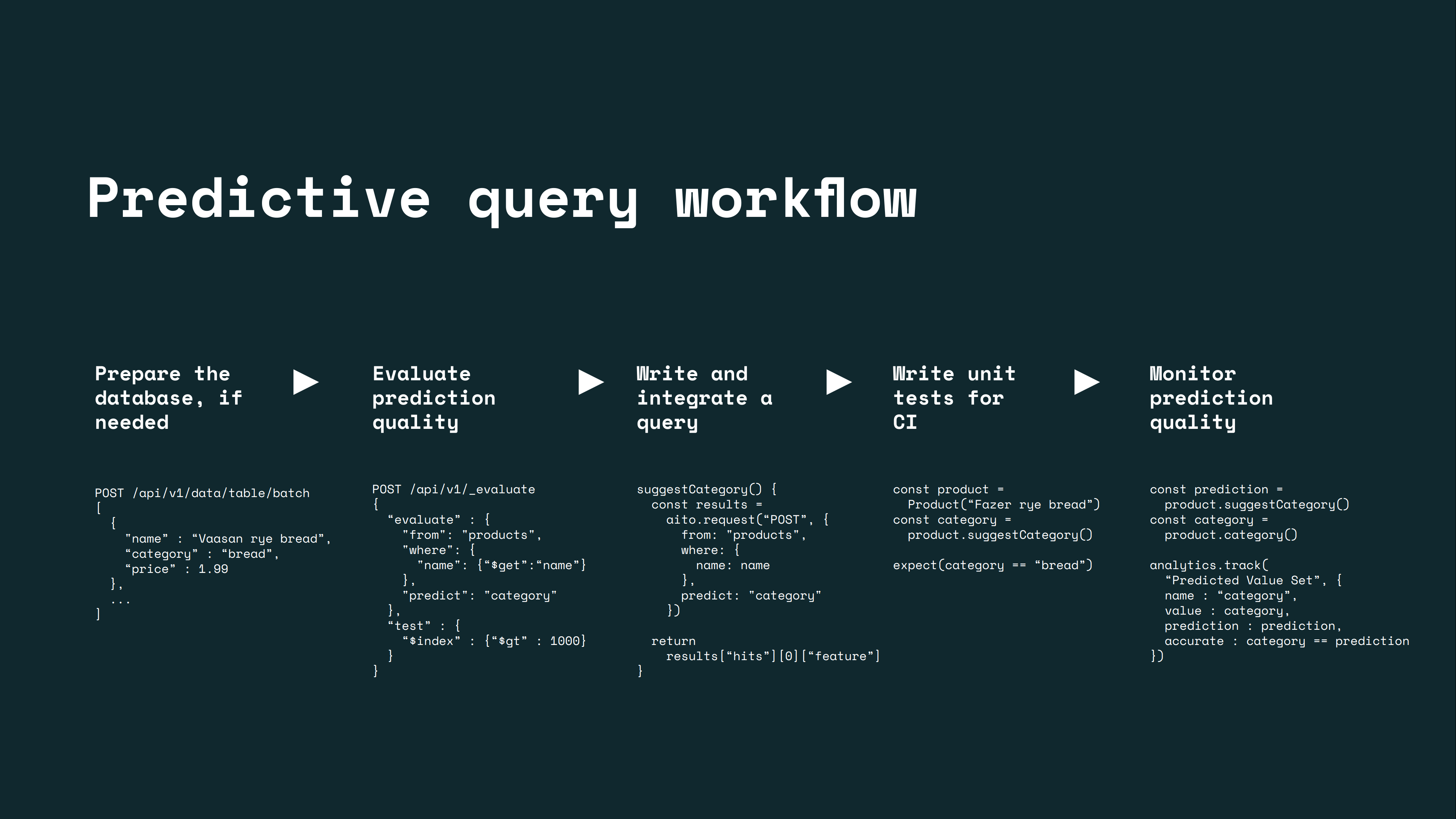

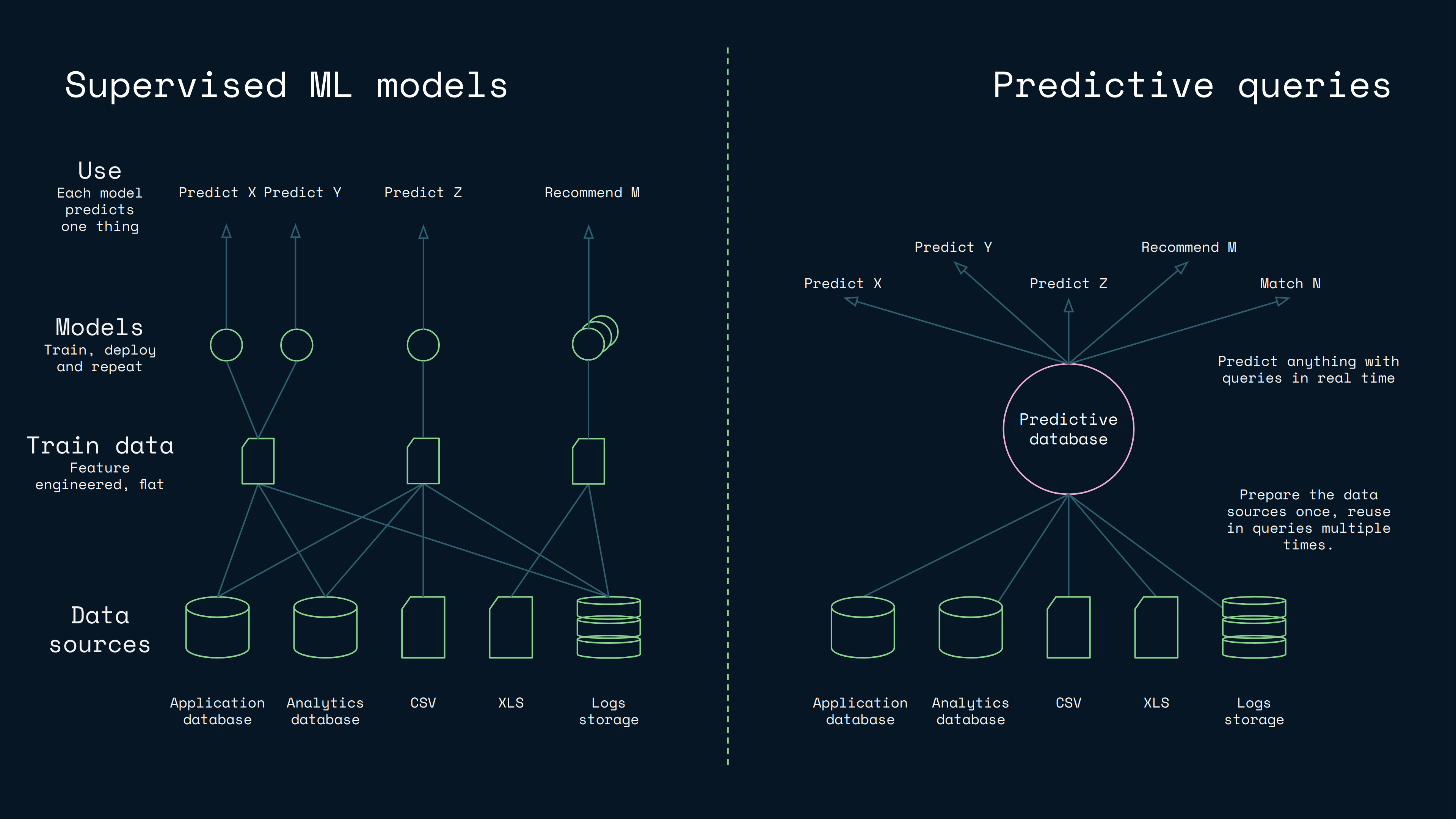

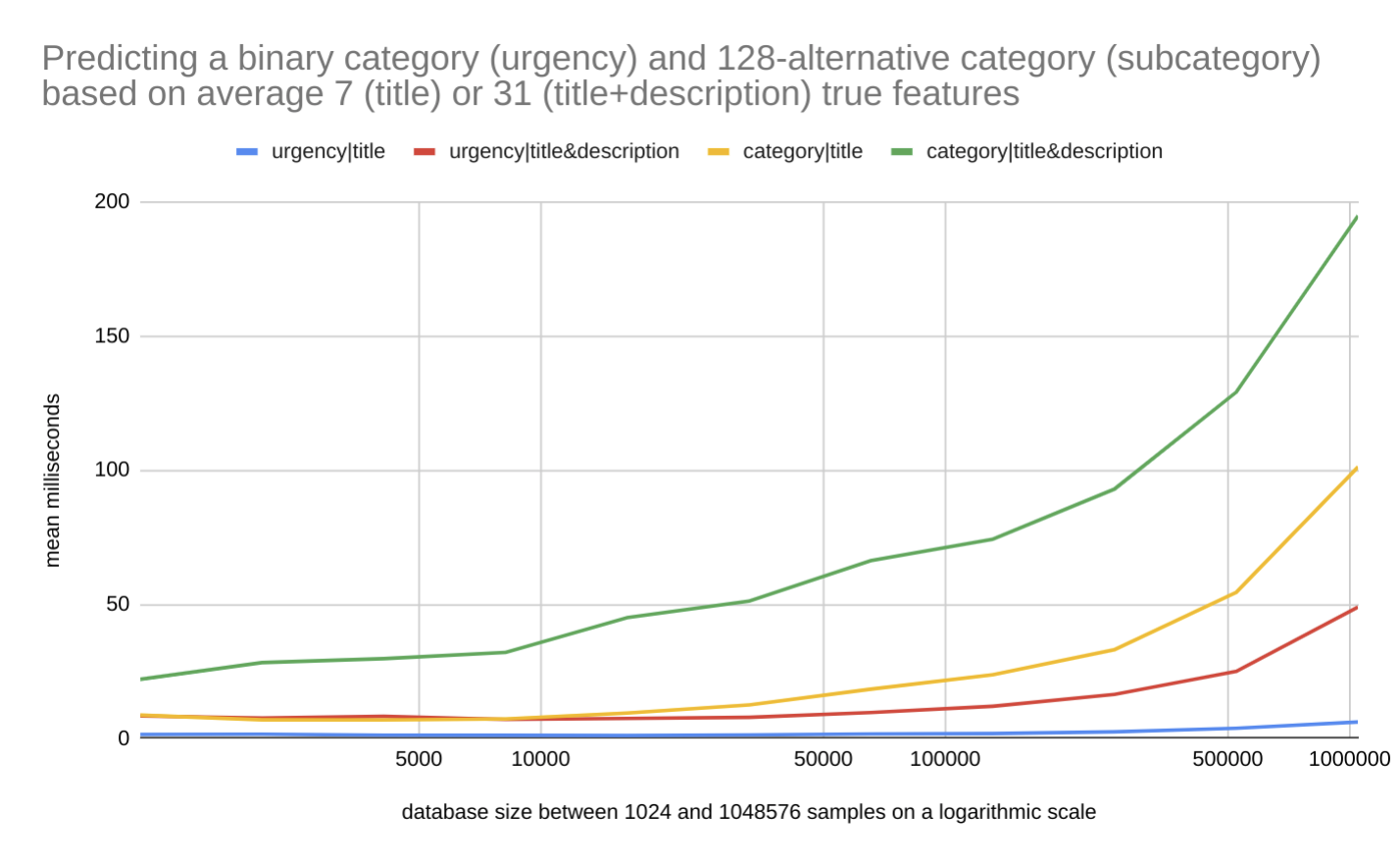

While the previous examples may sound visionary, we have succeeded in realizing the vision. It turns out that by integrating machine learning deeply into a database, it is possible to optimize model building to a level where you can create lazy statistical models in milliseconds. This lets you receive a query, build a model for it, use it, and return an instant response. This offers a radically faster workflow and a simpler architecture compared to traditional supervised machine learning.

For this reason, we have built a custom database that is thoroughly optimized for machine learning operations, and we have deeply integrated a machine learning solution into the database's core. This creates not just a new database category, but also a new kind of machine learner. Instead of needing to train separate models for production, you can just ask for predictions and get instant answers, just like how AI works in the movies.

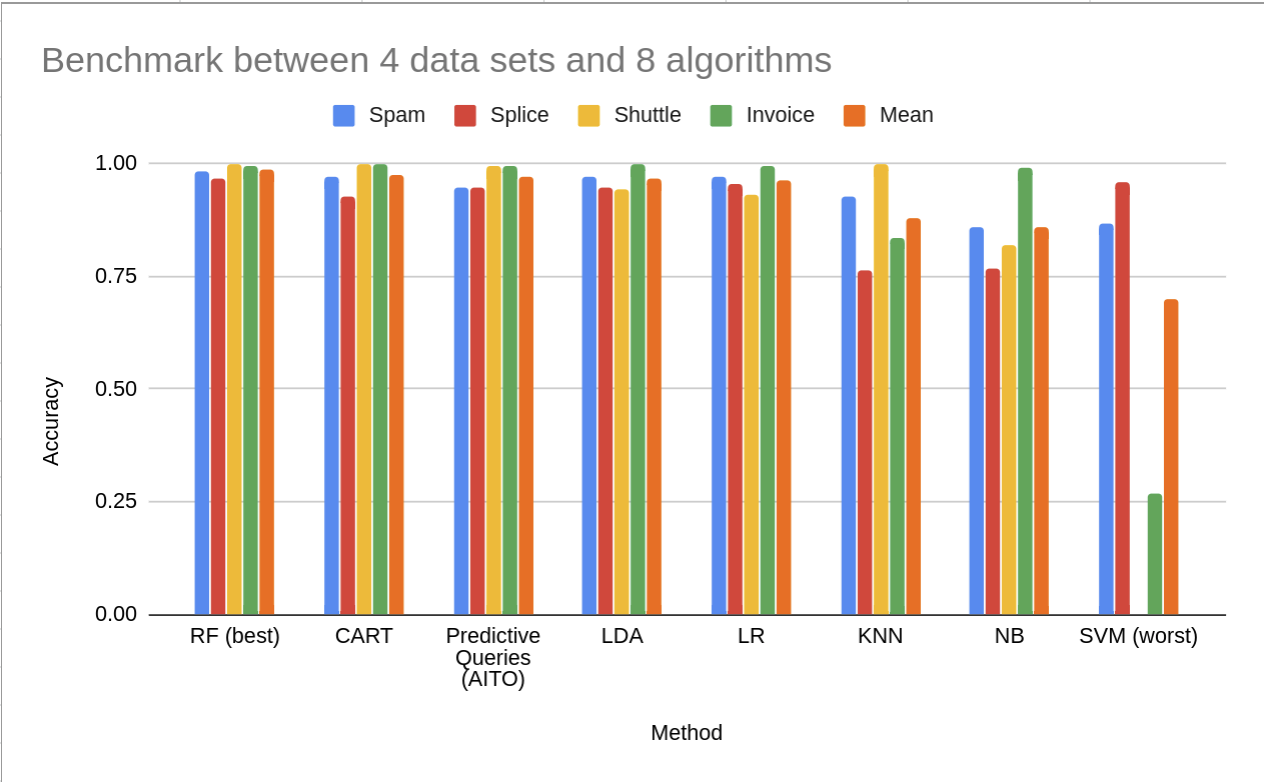

While the lazy machine learning models are created in milliseconds, the prediction accuracy can remain relatively good. This is explained by lazy learning's ability to model the inference more locally and in a more query-specific manner. You can read more about this topic in the blog post "Could predictive database queries replace machine learning models?".
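As a rough illustration of the lazy approach, the following Python sketch builds a tiny naive Bayes model at query time, uses it once, and throws it away. This is only a toy under stated assumptions: the `lazy_predict` function, the feature names, and the rows are hypothetical, and naive Bayes is swapped in as a simple stand-in, not as a description of the algorithm inside Aito.

```python
from collections import Counter

# Hypothetical data stored as plain rows; no model is trained up front.
rows = [
    {"weekday": "sat", "customer_type": "family", "product": "milk"},
    {"weekday": "sat", "customer_type": "family", "product": "milk"},
    {"weekday": "sat", "customer_type": "student", "product": "noodles"},
    {"weekday": "mon", "customer_type": "student", "product": "noodles"},
    {"weekday": "mon", "customer_type": "family", "product": "bread"},
    {"weekday": "mon", "customer_type": "student", "product": "coffee"},
]

def lazy_predict(rows, where, target):
    """Build a naive Bayes model for this one query, use it, and discard it."""
    classes = Counter(r[target] for r in rows)
    scores = {}
    for cls, n_cls in classes.items():
        # Start from the prior P(class), then multiply in one smoothed
        # likelihood P(feature=value | class) for each condition that
        # appears in this query's "where" clause. Features the query
        # does not mention are never touched.
        score = n_cls / len(rows)
        for feature, value in where.items():
            n_values = len({r[feature] for r in rows})
            n_match = sum(
                1 for r in rows if r[target] == cls and r[feature] == value
            )
            score *= (n_match + 1) / (n_cls + n_values)
        scores[cls] = score
    total = sum(scores.values())
    # Normalize to probabilities and rank, highest first.
    return sorted(
        ((c, s / total) for c, s in scores.items()),
        key=lambda pair: (-pair[1], pair[0]),
    )

answer = lazy_predict(rows, {"weekday": "sat", "customer_type": "family"}, "product")
for product, probability in answer:
    print(f"{product}: {probability:.3f}")
```

The point of the sketch is the workflow rather than the statistics: there is no separate training step or stored model, and the work done depends only on the conditions in the query at hand.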

The Impact

The dramatic reduction in the cost of implementing smart functionality with queries means that it becomes economical to:

- Add ML functionality to internal tools, PoCs, MVPs, and smaller products.

- Add numerous smaller ML functionalities, like the little things that ease the users’ lives.

- Make the software thoroughly smart and include all the smart functionality from the ML features’ buffet.

Overall, the changed economics can democratize AI and make it affordable; it could fundamentally change the way AI/ML is used.

Meet Aito

This vision of the predictive database was implemented by our startup, Aito. Our product, also called Aito, is offered as SaaS to developers around the world, and it is already used in production by several companies.

As a predictive database, Aito lets you query both the known facts, like a normal database, and the unknown, like AI/ML solutions. It also lets you seamlessly combine database filtering with machine learning scoring.

Aito exists to support developers who value quick time to market and who are looking for powerful yet simple tools that can solve a wide range of machine learning and data-related problems.

Interested? Check out this documentation to have a closer look – or try it out so we can show you how it works for you.
