How to evaluate your results with Aito

Maria Laaksonen

Customer success engineer

February 4, 2020 • 4 min read

The model is the brain of a machine learning algorithm. It is the part that makes the necessary deductions from the data to produce results, and the part you can tweak to make those results more accurate. But if you tweak the model, how do you know whether your results are getting better or worse?

That's when model evaluation comes into play. In this article, we go through how to make sense of your Aito results, i.e. how to evaluate your Aito queries.

Introduction

In software engineering terms, model evaluation resembles system testing. In system testing, you verify that the completely integrated system meets its requirements. In model evaluation, the system under test is the model, and the requirement is the accuracy the model needs to reach. The point of testing the model is not only to find out whether it works as intended, but also to improve it according to the metrics and get more accurate results.

In Aito, the model you’re evaluating and improving is the query you’re defining. For example, in this blog post we discussed how to choose a sales rep for a lead. We could first evaluate the performance of the model in that example, which uses the predict endpoint, and then test whether the proposed improvement, using the recommend endpoint instead, really improves the results. Based on the metrics, we could then tell whether the recommend endpoint is a better option than the predict endpoint.

Evaluating a model is therefore an important step in your workflow when you build machine learning into your software.

Evaluation in Aito

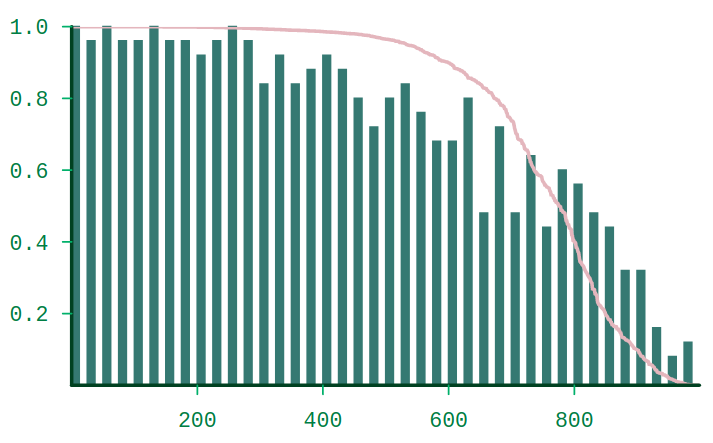

Aito comes with built-in functionality for model evaluation. The evaluate endpoint lets you choose your test and training datasets from the data you have uploaded into Aito, runs the evaluation for the defined query, and then outputs the evaluation metrics. From the metrics you can read the accuracy of the model and, for example, create a measurements vs. estimates plot.

Numerous methods exist for model evaluation. All of them require a test set, i.e. data that is unknown to the model. The test set is needed to detect overfitting, where the model works perfectly with the existing data but is prone to fail with new data. The training set is the data known to the model. Both the test and training sets contain the true label for the target variable; in the sales rep example, that label is the sales rep ID.

In Aito, the evaluation is based on the holdout method, so the data is simply divided into a test set and a training set. In the evaluate query you select a test set from your data, and the rest is used for training. Each row in the test set is used as input to Aito, which returns a label's probability calculated from the training set. The result from Aito is then compared to the true label of the test set row.
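To make the holdout idea concrete, here is a minimal Python sketch. It is not how Aito computes its results: the predictor is a deliberately trivial majority-label baseline, and the function name, the sample rows, and the choice of every fourth row as the test set are all assumptions made for illustration.

```python
from collections import Counter


def holdout_accuracy(rows, label, is_test):
    """Split rows into test and training sets, then score a majority-label baseline."""
    test = [row for i, row in enumerate(rows) if is_test(i)]
    train = [row for i, row in enumerate(rows) if not is_test(i)]

    # "Training": the baseline only remembers the most common label in the training set.
    majority = Counter(row[label] for row in train).most_common(1)[0][0]

    # Evaluation: compare the prediction with the true label of every test row.
    hits = sum(1 for row in test if row[label] == majority)
    return {"train_size": len(train), "test_size": len(test), "accuracy": hits / len(test)}


# Made-up rows, only for illustration.
rows = [
    {"city": city, "sales_rep_id": rep}
    for city, rep in [
        ("Helsinki", "A"), ("Espoo", "A"), ("Vantaa", "B"), ("Turku", "A"),
        ("Helsinki", "B"), ("Oulu", "A"), ("Espoo", "A"), ("Tampere", "B"),
    ]
]

# Hold out every fourth row (indices 0 and 4) as the test set.
result = holdout_accuracy(rows, "sales_rep_id", lambda i: i % 4 == 0)
print(result)
```

The important property is that the test rows never contribute to the "training" step, so the accuracy reflects how the predictor behaves on data it has not seen.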

Sales rep example

Now let’s go back to our sales rep example. If we want to evaluate our original query, we can send it to the _evaluate endpoint as follows:

    {
      "test": {
        "$index": {
          "$mod": [4, 0]
        }
      },
      "evaluate": {
        "from": "sales_reps",
        "where": {
          "country_region": {"$get": "country_region"},
          "city": {"$get": "city"},
          "company_size": {"$get": "company_size"},
          "annual_revenue": {"$get": "annual_revenue"},
          "total_revenue": {"$get": "total_revenue"}
        },
        "predict": "sales_rep_id"
      },
      "select": ["trainSamples", "testSamples", "baseAccuracy", "accuracyGain", "accuracy", "error", "baseError", "alpha_binByTopScore"]
    }

In the example, we’re defining the test cases in the “test” parameter as: take every fourth row from the data starting from index 0. The evaluated query is defined in the “evaluate” parameter and is the same as the one used for the _predict endpoint, except that now, instead of defined values, we use the $get parameter. $get lets us access the values per row. In the select parameter, we’re defining the metrics we want to be returned. Without the select parameter, a lot of different metrics are returned. For more on the response metrics for evaluate, you can check our API docs.
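As a rough sketch, an evaluate query like this could be assembled and wrapped into an HTTP request in Python as shown below. The host name, the API key placeholder, the `x-api-key` header name, and both helper function names are assumptions for illustration; check the API docs for the details of your own instance. The `select` list is a shortened subset of the metrics used in the example.

```python
import json
import urllib.request

FIELDS = ["country_region", "city", "company_size", "annual_revenue", "total_revenue"]


def build_evaluate_query(fields, target, every_nth=4, offset=0):
    """Build the evaluate body: hold out every n-th row and read inputs with $get."""
    return {
        "test": {"$index": {"$mod": [every_nth, offset]}},
        "evaluate": {
            "from": "sales_reps",
            # $get pulls each field's value from the test row being evaluated.
            "where": {field: {"$get": field} for field in fields},
            "predict": target,
        },
        "select": ["trainSamples", "testSamples", "baseAccuracy", "accuracy"],
    }


def build_evaluate_request(base_url, api_key, query):
    """Wrap the query in a POST request (hypothetical host and assumed header name)."""
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/api/v1/_evaluate",
        data=json.dumps(query).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-api-key": api_key},
        method="POST",
    )


query = build_evaluate_query(FIELDS, "sales_rep_id")
request = build_evaluate_request("https://example.aito.app", "YOUR_API_KEY", query)
# Sending is left out on purpose; with real credentials it would be:
# response = urllib.request.urlopen(request)
```

Building the `where` clause from a list of field names keeps the query in sync with the fields you actually want Aito to use as input.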

When evaluation takes time

Evaluating a query can take a long time if you have a lot of data. The timeout for the regular Aito endpoints (predict, match, similarity, query, recommend, search, relate and evaluate) is 30 seconds for security reasons. To work around this, we have released the jobs endpoint, which you can use with all of the regular endpoints, though the only endpoint where queries should take longer than 30 seconds is evaluate. If you’re running into this problem with the other endpoints, please contact us in Aito Slack.

Using the jobs endpoint is simple. Just add jobs to your endpoint path; the body remains the same as for the regular endpoint. So instead of the path /api/v1/_evaluate, use /api/v1/jobs/_evaluate.
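The path change can be expressed as a small helper. This is only a sketch; `to_jobs_path` is a hypothetical name, not part of any Aito client.

```python
def to_jobs_path(path):
    """Turn a regular endpoint path into its jobs counterpart."""
    prefix = "/api/v1/"
    if not path.startswith(prefix):
        raise ValueError("expected a path starting with " + prefix)
    if path.startswith(prefix + "jobs/"):
        return path  # already a jobs path, leave it untouched
    return prefix + "jobs/" + path[len(prefix):]


jobs_path = to_jobs_path("/api/v1/_evaluate")
print(jobs_path)
```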

After you create the job, it goes into a queue, and the request returns the ID of the job, which you can then use to check its status or get the results. You can also use the jobs endpoint to list all of the jobs currently available. Please note that job results are not available forever; they have a defined lifespan. You can read the lifespan from the “expiresAt” parameter, which is returned when you ask for the job status.

Usage of the jobs endpoint:

- Create a job
  - Method: POST
  - Endpoint: /api/v1/jobs/your-query-endpoint
  - Body: The same as used for the normal query endpoint
  - Result: Job ID, startedAt and path
- List all jobs
  - Method: GET
  - Endpoint: /api/v1/jobs/
  - Body: None
  - Result: List of job ID, expiresAt, startedAt, finishedAt and path
- Get the status of a job
  - Method: GET
  - Endpoint: /api/v1/jobs/your-job-id
  - Body: None
  - Result: Job ID, expiresAt, startedAt, finishedAt and path
- Get the result of a job
  - Method: GET
  - Endpoint: /api/v1/jobs/your-job-id/result
  - Body: None
  - Result: The same as from the normal query endpoint
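Put together, the create, poll, and fetch steps could look roughly like the Python sketch below. It makes several assumptions: the job ID is taken from an `id` field, a job counts as finished once `finishedAt` is set, and the HTTP layer is passed in as a `send(method, path, body)` function that returns parsed JSON. The fake `send` used here exists only so the sketch runs without a real Aito instance, and its return values are made up.

```python
import time


def run_job(send, endpoint, body, poll_seconds=1.0, max_polls=60):
    """Create a job, poll its status until it finishes, then fetch the result."""
    job = send("POST", "/api/v1/jobs/" + endpoint, body)
    job_id = job["id"]  # assumed name of the job ID field

    for _ in range(max_polls):
        status = send("GET", "/api/v1/jobs/" + job_id, None)
        if status.get("finishedAt"):  # assumption: set only once the job is done
            return send("GET", "/api/v1/jobs/" + job_id + "/result", None)
        time.sleep(poll_seconds)
    raise TimeoutError("job " + job_id + " did not finish in time")


def make_fake_send():
    """Stand-in for a real HTTP client; the job finishes on the second status poll."""
    polls = {"count": 0}

    def send(method, path, body):
        if method == "POST":
            return {"id": "job-1", "startedAt": "t0", "path": path}
        if path.endswith("/result"):
            return {"accuracy": 0.5}
        polls["count"] += 1
        return {"id": "job-1", "finishedAt": "t1" if polls["count"] >= 2 else None}

    return send


result = run_job(make_fake_send(), "_evaluate", {"evaluate": {}}, poll_seconds=0)
print(result)
```

Injecting `send` keeps the polling logic independent of the HTTP library you use, and the `max_polls` limit prevents the loop from waiting forever on a job that never finishes.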

Documentation for the jobs endpoint can also be found in our API docs.

We <3 Feedback

If you have any feedback on the evaluate or jobs endpoints, join the discussion in Aito Slack!
